AI Docking vs. Traditional Docking: What the 2025 Benchmarks Really Tell Us

By the Pauling.AI Team

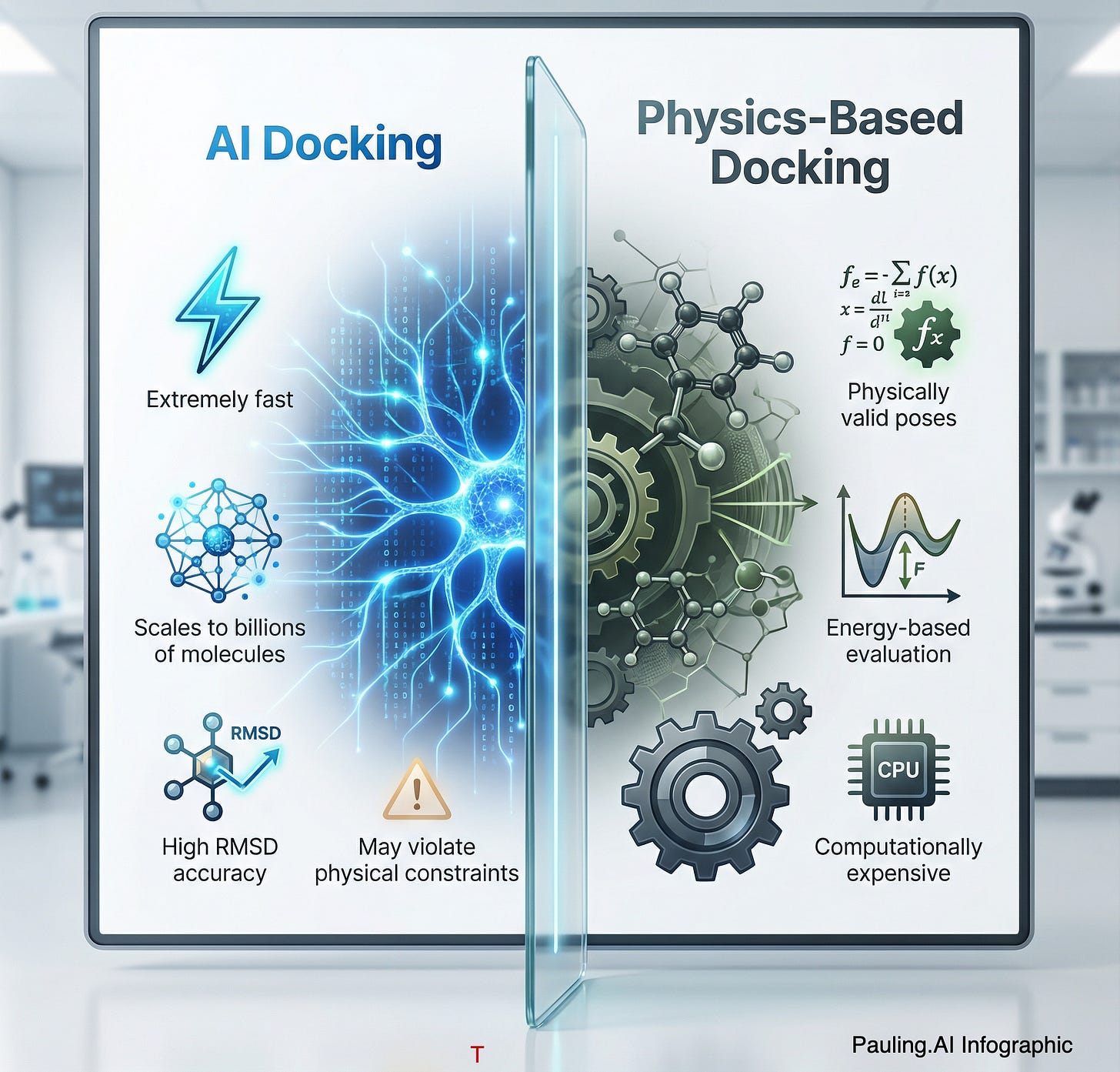

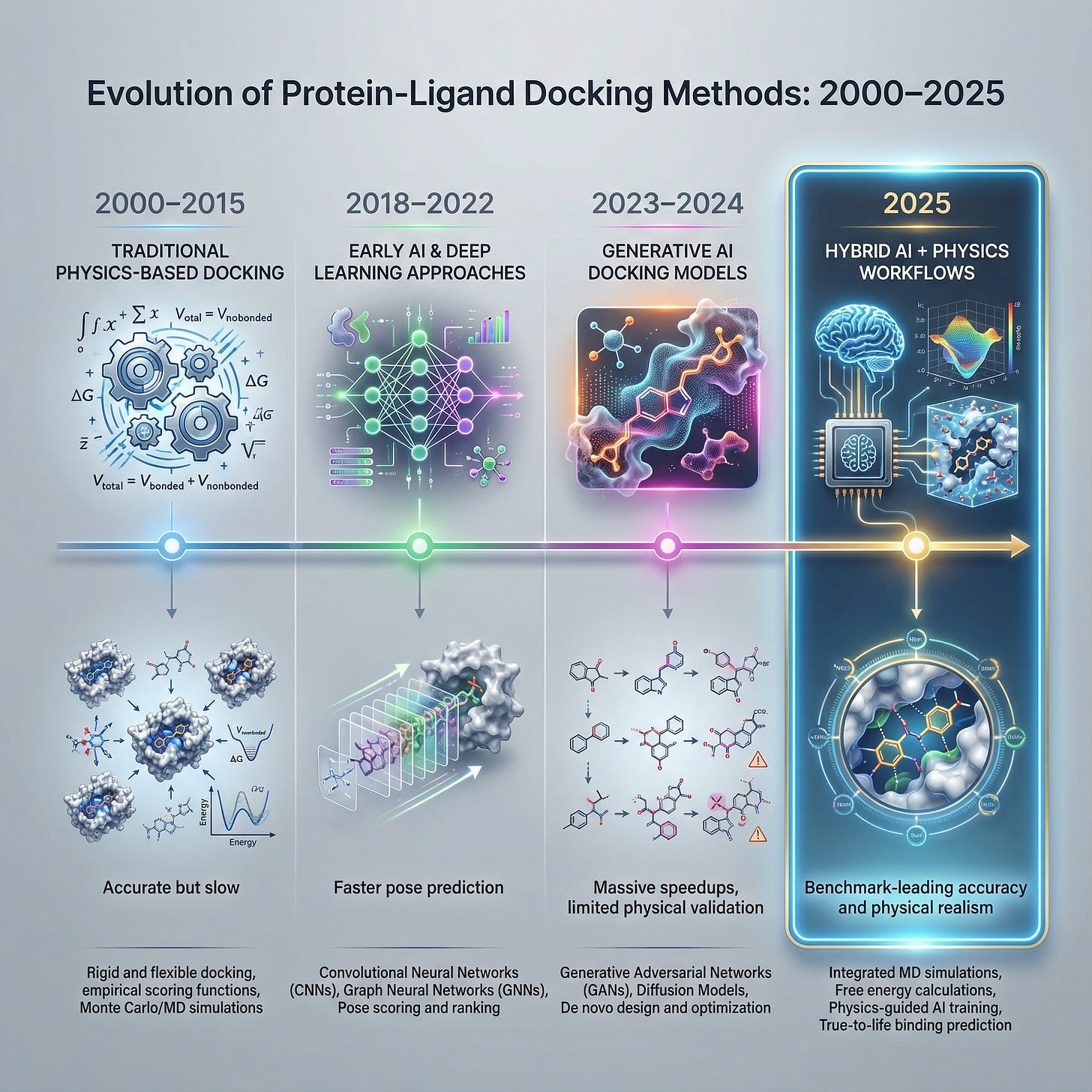

For decades, computational chemistry had a “performance limitation.” Traditional physics-based docking tools like AutoDock Vina and Glide were the gold standard: reliable, explainable, but painfully slow. Then came the generative AI wave, promising to solve protein-ligand interactions in seconds rather than hours [1].

Now that we are well into 2025, the hype has settled, and the hard data is in. New benchmarks like PoseX and PoseBench have pitted the latest AI models (AlphaFold 3, DiffDock, Boltz-1x) against the physics-based old guard.

The results are not a simple victory for one side [2]. Instead, they point to a singular conclusion: the future isn’t AI or physics alone, but a hybrid of the two.

Here is what the 2025 data actually says and why Pauling.AI’s infrastructure is built the way it is.

1. Performance: AI Is the “Undisputed King”

If your goal is to scan a massive library of 10 billion compounds to find a “general fit,” traditional docking is obsolete.

The Data: In the 2025 PoseX benchmark, some AI-driven tools (such as AlphaFold 3 and Uni-Mol) demonstrated a significantly higher “success rate” (the fraction of predictions that land within 2 Å RMSD of the reference pose) than classical force-field methods [1].

The Gap: Physics-based tools explore conformational space via sampling (simulated annealing or genetic algorithms), which can be computationally expensive. AI models, treating docking as a geometric deep learning problem, “infer” the pose in milliseconds.

The Reality Check: For docking into a known binding pocket of a target, AI is 1,000x faster.
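To make the success-rate metric above concrete, here is a minimal Python sketch of how a pose RMSD and a 2 Å success rate could be computed. It assumes the predicted and reference poses list the same atoms in the same order, and it skips the alignment and symmetry corrections that real benchmarks apply. The function names and toy coordinates are our own illustration, not part of PoseX.

```python
import math

def rmsd(pred, ref):
    """RMSD in angstroms between two poses with identical atom ordering.

    Simplification: no superposition and no symmetry correction.
    """
    if len(pred) != len(ref) or not pred:
        raise ValueError("poses must be non-empty and have the same number of atoms")
    squared = sum((p[i] - r[i]) ** 2 for p, r in zip(pred, ref) for i in range(3))
    return math.sqrt(squared / len(pred))

def success_rate(pairs, threshold=2.0):
    """Fraction of (predicted, reference) pairs with RMSD below the threshold."""
    hits = sum(1 for pred, ref in pairs if rmsd(pred, ref) < threshold)
    return hits / len(pairs)

# Toy data (hypothetical): one reference pose and two predictions.
ref = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (1.5, 1.5, 0.0)]
good = [(x + 0.5, y, z) for x, y, z in ref]  # every atom shifted by 0.5 angstrom
bad = [(x + 3.0, y, z) for x, y, z in ref]   # every atom shifted by 3.0 angstrom

print(rmsd(good, ref))                            # 0.5
print(success_rate([(good, ref), (bad, ref)]))    # 0.5
```

Only the first prediction falls under the 2 Å cutoff, so half of the toy predictions count as successes.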

2. Stereochemical Validity: The Key Weakness of Pure AI

However, speed comes at a cost. The 2025 benchmarks revealed a critical weakness in pure AI methods: stereochemical validity.

The Data: While AI models often get the RMSD (Root Mean Square Deviation) right, they frequently produce “physically impossible” structures. The PoseBusters benchmark suite highlights that pure generative models often ignore steric clashes, creating atoms that overlap or bond angles that defy the laws of physics.

The Consequence: An AI might tell you a drug binds perfectly, but if you ran that complex through a molecular dynamics (MD) simulation, for example with GROMACS, the structure would explode. Pure AI lacks an inherent understanding of energy landscapes; it only knows geometric probability.
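The kind of steric-clash test described above can be sketched in a few lines of Python. This is a simplified illustration, not PoseBusters' actual implementation: it flags any ligand-protein atom pair closer than a fraction of the sum of their van der Waals radii. The radii are the commonly quoted Bondi values; the 0.75 tolerance and the `find_clashes` helper are assumptions made for this example.

```python
import math

# Commonly quoted Bondi van der Waals radii, in angstroms.
VDW_RADII = {"H": 1.20, "C": 1.70, "N": 1.55, "O": 1.52, "S": 1.80}

def find_clashes(ligand, protein, tolerance=0.75):
    """Return (ligand_index, protein_index, distance) for every clashing pair.

    A pair clashes when its distance is below tolerance * (r_i + r_j).
    The tolerance value is an illustrative assumption.
    """
    clashes = []
    for i, (el_l, pos_l) in enumerate(ligand):
        for j, (el_p, pos_p) in enumerate(protein):
            distance = math.dist(pos_l, pos_p)
            limit = tolerance * (VDW_RADII[el_l] + VDW_RADII[el_p])
            if distance < limit:
                clashes.append((i, j, distance))
    return clashes

# Toy complex (hypothetical coordinates): the ligand carbon sits 1.0 angstrom
# from a protein carbon, far closer than two carbon atoms can physically get
# without being bonded.
protein = [("C", (0.0, 0.0, 0.0)), ("O", (4.0, 0.0, 0.0))]
ligand = [("C", (1.0, 0.0, 0.0)), ("N", (4.0, 3.5, 0.0))]

print(find_clashes(ligand, protein))  # [(0, 0, 1.0)]
```

A pose can score well on RMSD and still fail a check like this, which is why validity is reported separately from accuracy.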

3. The 2025 Winner: The Hybrid Workflow

This brings us to the most important finding of the year. The highest accuracy scores in 2025 weren’t achieved by AI alone or Physics alone. They were achieved by AI generation followed by Physics relaxation [4].

Recent studies show that when an AI-predicted pose (from a tool like DiffDock) is refined with a quick physics-based minimization step (using a force field), accuracy jumps significantly.

Why it works: The AI acts as a “hyper-efficient guide,” bypassing the slow global search to find the right energy valley. The Physics engine then “cleans up” the mess, resolving steric clashes and optimizing hydrogen bonds.

The Pauling.AI Approach: This is exactly why our “AI Chemist” agents don’t just use LLMs. We also integrate Uni-Dock for rapid pose generation and then validate those poses using rigorous MD simulations (GROMACS). We treat AI as the architect and Physics as the engineer.
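The generate-then-relax pattern in this section can be illustrated with a toy Python sketch. It is not a real force field and not our production pipeline: it runs plain steepest descent on a soft repulsion term (which penalizes ligand-protein contacts shorter than `min_dist`) plus a harmonic restraint that keeps each atom near the AI-predicted position. All function names, constants, and coordinates are assumptions chosen for illustration.

```python
import math

def energy_and_gradient(pose, start, protein, min_dist=3.0, k_rep=1.0, k_res=0.1):
    """Toy energy: soft repulsion below min_dist plus a restraint to the start pose."""
    energy = 0.0
    grads = []
    for x, x0 in zip(pose, start):
        # Harmonic restraint pulls the atom back toward the AI-predicted position.
        grad = [2.0 * k_res * (x[i] - x0[i]) for i in range(3)]
        energy += k_res * sum((x[i] - x0[i]) ** 2 for i in range(3))
        for p in protein:
            d = math.dist(x, p)
            # Coincident atoms (d == 0) have no defined direction; a real
            # implementation must handle that case, this sketch skips it.
            if 1e-9 < d < min_dist:
                overlap = min_dist - d
                energy += k_rep * overlap ** 2
                for i in range(3):
                    grad[i] -= 2.0 * k_rep * overlap * (x[i] - p[i]) / d
        grads.append(grad)
    return energy, grads

def relax(ai_pose, protein, steps=200, lr=0.1):
    """Steepest-descent relaxation of an AI-predicted pose (protein held fixed)."""
    start = [tuple(atom) for atom in ai_pose]
    pose = [list(atom) for atom in ai_pose]
    for _ in range(steps):
        _, grads = energy_and_gradient(pose, start, protein)
        for atom, grad in zip(pose, grads):
            for i in range(3):
                atom[i] -= lr * grad[i]
    return [tuple(atom) for atom in pose]

# Toy case (hypothetical): the "AI" placed a ligand atom 1.0 angstrom from a protein atom.
protein = [(0.0, 0.0, 0.0)]
ai_pose = [(1.0, 0.0, 0.0)]
relaxed = relax(ai_pose, protein)

before = math.dist(ai_pose[0], protein[0])
after = math.dist(relaxed[0], protein[0])
print(f"contact distance: {before:.2f} -> {after:.2f} angstroms")
```

The relaxed atom moves away from the clash but stays close to the predicted position, which mirrors the division of labor described above: the AI supplies the starting point, and the physics step only makes local corrections. A production workflow would use a real force field and minimizer instead of this two-term energy.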

4. AlphaFold 3 & The “Co-Folding” Era

A special mention must go to AlphaFold 3 and Boltz-1x [3]. In 2025, these “co-folding” models (folding the protein around the ligand) have revolutionized targets where the crystal structure is unknown.

The Benchmark: While they trail specialized docking tools in known pockets, they are unrivaled for blind docking (where the binding site is unknown).

The Insight: For targets with high flexibility (induced fit), traditional rigid-receptor docking fails. AI co-folding is the only viable path forward.

Conclusion: Don’t Choose, Integrate.

The “AI vs. Physics” debate is a false dichotomy. The 2025 benchmarks prove that:

AI solves the search problem (Speed).

Physics solves the validity problem (Accuracy).

At Pauling.AI, we are not building a tool that blindly trusts a neural network. We are building autonomous agents that use AI to hypothesize and Physics to verify, compressing years of discovery into days without sacrificing scientific rigor.

References & Further Reading (2025)

PoseX Benchmark: AI Defeats Physics-based Methods on Protein Ligand Cross-Docking (arXiv:2505.03) [1]. This paper introduced the PoseX dataset, showing AI models surpassing Glide in RMSD accuracy but requiring physics-based relaxation for validity.

Werner, J. (2025, June 10). AlphaFold 3 extends modeling capacity to more biological targets. Forbes. This article discusses how AlphaFold 3 expands beyond classical protein structure prediction to model a broader range of biomolecular structures, including ligand and ion interactions, greatly extending its applicability in computational biology and drug discovery [2].

Masters et al. (2025) describe how AlphaFold 3 (AF3) [3] and similar “co-folding” approaches jointly predict protein and small-molecule complexes, in effect folding the protein around the ligand. This work shows co-folding models can achieve high performance in blind docking tasks (predicting ligand binding without a known pocket) relative to conventional algorithms, highlighting their suitability for targets with unknown binding sites and induced-fit flexibility.

Gómez Borrego & Torrent Burgas (2025) evaluated protein-ligand docking methods and reported that using AlphaFold-predicted structures followed by molecular dynamics (MD) refinement leads to improved docking outcomes, demonstrating how combining data-driven AI predictions with physics-based refinement can enhance the physical realism and predicted binding quality of complexes [4].

Nature Machine Intelligence: Benchmarking AI-powered docking methods from the perspective of virtual screening (Feb 2025) [5][6][7]. It highlighted that while AI is faster, it struggles with “physical plausibility” compared to traditional tools [1].

PoseBusters: The industry standard for checking if AI-generated molecules obey the laws of physics (no overlapping atoms).

Subscribe to the Pauling.AI blog for data-driven insights on AI-powered drug discovery.
